Most Popular

Start Routing Notifications Today!

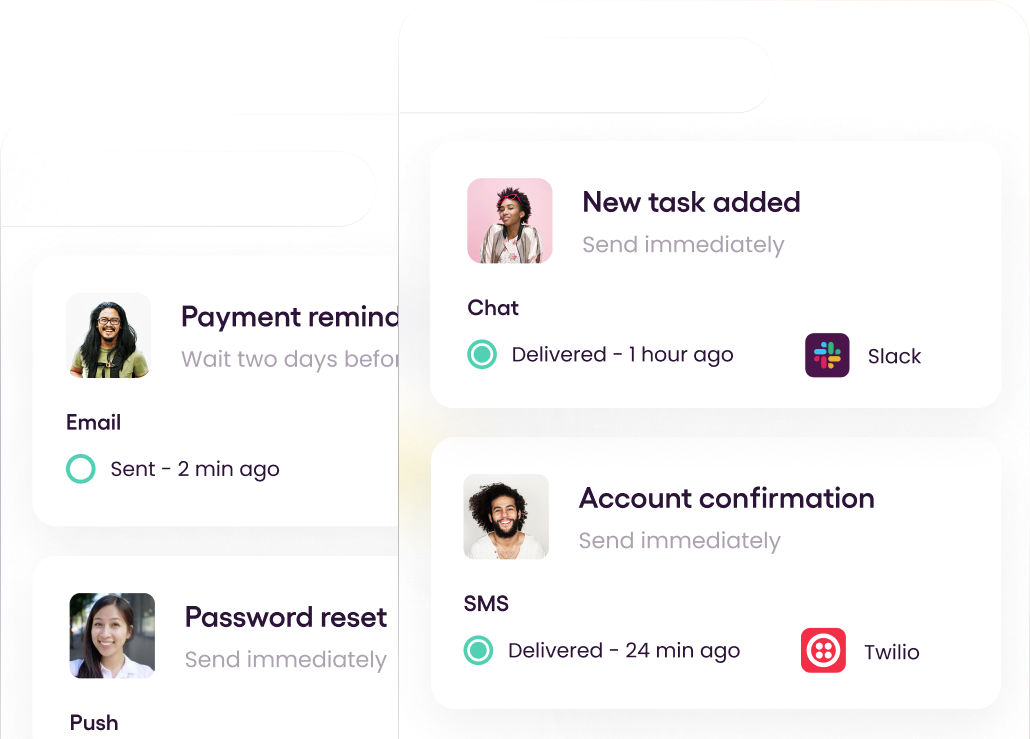

Courier is a notification service that centralizes all of your templates and messaging channels in one place which increases visibility and reduces engineering time.

Sign-up

Build a WebAssembly Language for Fun and Profit: Parsing

In the last post of this series on how to build a WebAssembly programming language, we constructed a lexer. In this post, we’ll cover the next phase of our compiler, parsing. Parsing is the portion of our compiler that takes the token stream generated by the lexer and converts it into an abstract syntax tree (AST).

An AST is a tree-like data structure that organizes the tokens into a logical hierarchy that can more easily be translated into machine code. Thankfully, because wispy is an S-expression language, our code is essentially already an AST. Take the following stream of tokens:

1“(“, “add”, “3”, “(“, “sub”, “2”, “1”, “)”, “)”

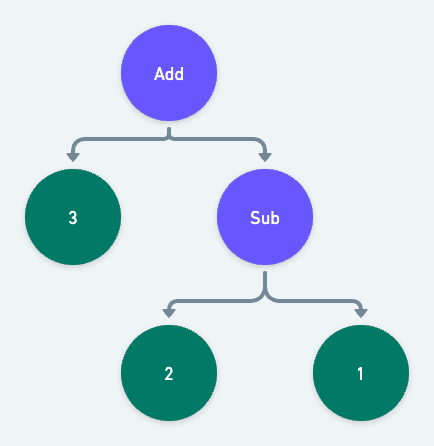

Each set of parentheses represents a subtree, where the first token is the operator node and the following tokens are its operands. If we run into another opening parenthesis before the current set is closed, we know it represents an operand that itself is a subtree. The above stream of tokens would be organized into a tree that looks like this:

If you're interested in writing a parser for a more complex C-like syntax, see my previous Building A Programming Language series.

More About AST

As we did with the lexer, we'll start by defining our types. These types will define the structure of our AST. Each type represents a “Node”, the circle from our diagram in the intro. Here are the basic nodes. We'll gloss over them, as they aren't a lot different from the tokens we defined in the lexer:

1// src/types/ast.mts23export type IntNode = {4type: "int";5value: number;6};78export type FloatNode = {9type: "float";10value: number;11};1213export type IdentifierNode = {14type: "identifier";15identifier: string;16};1718export type TypedIdentifierNode = {19type: "typed-identifier";20// Note that here, we break down the identifier into its components21identifier: string;22typeIdentifier: string;23};

A new concept to the AST is the BlockNode. A BlockNode is an expression made up

of a group of other nodes.

For example, (add 1 2) is a block of three nodes:

- An identifier that evaluates to a function,

add. - An Int that simply evaluates to the number

1. - An Int that simply evaluates to the number

2.

How the block itself gets evaluated is up to the compiler. We'll get to that in the next post.

Here's the definition:

1// src/types/ast.mts23export type BlockNode = {4type: "block";5expressions: AstNode[];6};

Finally, we define the AstNode. Like the Token type from the lexer, AstNode is a discriminated union that can be one of any other node we previously defined:

1export type AstNode = IntNode | FloatNode | IdentifierNode | TypedIdentifierNode | BlockNode;

You may have noticed that BlockNode has an array of AstNodes, Since AstNode can be a BlockNode, BlockNodes can contain child BlockNodes. In other words, BlockNode is a recursive type. This ability to recursively represent children that can have children is the foundation of our AST. It's where the tree in AST is allowed to form.

At this point src/types/ast.mts is finished and should look like this file.

Now export the types from src/types/index.mts as we did with the token types:

1// src/types/index.mts2export * from "./token.mjs";3export * from "./ast.mjs";

Constructing the AST

Now that we've defined the AST, it's time to build one.

Create a new src/parser.mts file and add all the imports we'll use:

1// src/parser.mts2import {3Token,4IdentifierNode,5TypedIdentifierNode,6IdentifierToken,7TypedIdentifierToken,8FloatToken,9FloatNode,10IntToken,11IntNode,12AstNode,13BlockNode,14} from "./types/index.mjs";

Now we can define our top level parse function. The parse function takes the tokens

generated by the lexer and returns a BlockNode that acts as our tree’s root.

1// src/parser.mts2export const parse = (tokens: Token[]): BlockNode => {3const blocks: BlockNode[] = [];45// This loop is run as long as there are tokens to consume6while (tokens.length) {7// consumeTokenTree converts an array of tokens into a tree of tokens, more on that later.8const tree = consumeTokenTree(tokens);910// parseBlock turns our new tree of tokens into an actual BlockNode, recursively. More on that later as well.11blocks.push(parseBlock(tree));12}1314// Finally we return the top level BlockNode15return {16type: "block",17expressions: blocks,18};19};

Next we define the consumeTokenTree function. consumeTokenTree converts a flat array of tokens, into a tree of tokens.

Given this wispy expression:

1(add (sub 3 1) (sub 5 2))

The lexer will produce this array of tokens:

1// Note: I've simplified the Token format to just be strings to keep things short2["(", "add", "(", "sub", "3", "1", ")", "(", "sub", "5", "2", ")", ")"];

consumeTokenTree will take that flat array and turn it into a tree. This is as simple

as putting every token in between a set of bracket tokens () into an array. So our

token array from above becomes this token tree:

1["add", [, "sub", "3", "1"], ["sub", "5", "2"]];

Here's the actual definition of consumeTokenTree:

1// src/parser.mts23// This is token besides for the bracket tokens4export type NonBracketToken = Exclude<Token, "parenthesis" | "square-bracket">;56// The token tree is made of NonBracketTokens and other TokenTrees7export type TokenTree = (NonBracketToken | TokenTree)[];89const consumeTokenTree = (tokens: Token[]): TokenTree => {10const tree: TokenTree = [];1112// Ensures the first token is a left bracket and then discards it, defined below this function.13consumeLeftBracket(tokens);1415while (tokens.length) {16// Preview the next token17const token = tokens[0];1819// Check to see if the next token is a left bracket.20if (token.type === "bracket" && getBracketDirection(token) === "left") {21// If it is, we just ran into a sub-TokenTree. So we can simply call this function within22// itself. Gotta love recursion.23tree.push(consumeTokenTree(tokens));24continue;25}2627// Check to see if the next token is a right bracket28if (token.type === "bracket" && getBracketDirection(token) === "right") {29// If it is, we just found the end of the tree on our current level30tree.shift(); // Discard the right bracket31break; // Break the loop32}3334// If the token isn't a bracket, it can simply be added to the tree on this level35tree.push(token);3637// Consume / discard the token from the main tokens array38tokens.shift();39}4041// Return the tree. Don't forget to check out the helper functions below!42return tree;43};4445const consumeLeftBracket = (tokens: Token[]) => {46const bracketDirection = getBracketDirection(tokens[0]);4748if (bracketDirection !== "left") {49throw new Error("Expected left bracket");50}5152return tokens.shift();53};5455const getBracketDirection = (token: Token): "left" | "right" => {56if (token.type !== "bracket") {57throw new Error(`Expected bracket, got ${token.type}`);58}5960// If we match a left bracket return left61if (/[\(\[]/.test(token.value)) return "left";6263// Otherwise return right64return "right";65};

Now that we have a token tree, we need to turn it into a block. To do so, we create a

parseBlock function that takes the tree as its input and returns a BlockNode:

1const parseBlock = (block?: TokenTree): BlockNode => {2return {3type: "block",4// This is where the recursive magic happens5expressions: block.map(parseExpression),6};7};

As you may have noticed, parseBlock maps each item of the tree with a yet to be written

parseExpression function. parseExpression takes either a TokenTree or a NonBracketToken and transforms it to its corresponding AstNode type.

Here's the definition:

1const parseExpression = (expression?: TokenTree | NonBracketToken): AstNode => {2// If the expression is an Array, we were passed another TokenTree, so we can3// pass the expression back to the parseBlock function4if (expression instanceof Array) {5return parseBlock(expression);6}78// The mapping here is pretty straight forward. Match the token type and pass the9// expression on to a more specific expression parser.10if (isTokenType(expression, "identifier")) return parseIdentifier(expression);11if (isTokenType(expression, "typed-identifier")) return parseTypedIdentifier(expression);12if (isTokenType(expression, "float")) return parseFloatToken(expression);13if (isTokenType(expression, "int")) return parseIntToken(expression);1415throw new Error(`Unrecognized expression ${JSON.stringify(expression)}`);16};

Let's define the isTokenType function. This function is pretty neat and demonstrates

one of the most powerful features of TypeScript, custom type guards. Simply put, isTokenType tests the expression and narrows down the type to a specific TokenType. This allows TypeScript to be certain we are passing the correct tokens to their corresponding parser functions down the line.

Here's the definition:

1export const isTokenType = <T extends Token["type"]>(2item: TokenTree | NonBracketToken | undefined,3type: T4): item is Extract<Token, { type: T }> => {5return isToken(item) && item.type === type;6};78const isToken = (item?: TokenTree | NonBracketToken): item is NonBracketToken => {9return !(item instanceof Array);10};

There's a lot happening there, so let's walk through it. First up, we have a generic definition,

<T extends Token["type"]>. This is essentially saying that T must be one of the possible values

of the Token.type field. Typescript is smart enough to know that means T must be one

of "int" | "float" | "identifier" | "typed-identifier" | "bracket.

The next interesting piece of code is the return type predicate item is Extract<Token, { type: T }>. This predicate tells TypeScript that if the return value of isTokenType is true, then item must be the Token whose type matches the string passed as the type parameter.

In practice that means that if we were to pass an unknown Token to isTokenType, typescript

will be able to correctly narrow the value to a more specific token, like IntToken.

Now that we have our custom type guard defined, we can define the actual token parsers. The first three are simple; they essentially just return a copy or slightly modified copy of the token:

1const parseFloatToken = (float: FloatToken): FloatNode => ({ ...float });23const parseIntToken = (int: IntToken): IntNode => ({ ...int });45const parseIdentifier = (identifier: IdentifierToken): IdentifierNode => {6return {7type: "identifier",8identifier: identifier.value,9};10};

The final parser is the parseTypedIdentifier. Remember that a typed identifier takes the form

identifier:type. Parsing it is as simple as splitting the string by the colon. The first value

of the returned array is the identifier, the second is the type.

Here's the definition:

1const parseTypedIdentifier = (identifier: TypedIdentifierToken): TypedIdentifierNode => {2const vals = identifier.value.split(":");34return {5type: "typed-identifier",6identifier: vals[0],7typeIdentifier: vals[1],8};9};

That's all the code required for a working parser. Before we move on, let's update the main

src/index.mts file to view the output of the parser:

1// src/index.mts2#!/usr/bin/env node3import { readFileSync } from "fs";4import { lex } from "./lexer.mjs";5import { parse } from "./parser.mjs";67const file = process.argv[2];8const input = readFileSync(file, "utf8");910const tokens = lex(input);11const ast = parse(tokens);12console.log(JSON.stringify(tokens, undefined, 2));

Build and run project:

1npx tsc2wispy example.wispy

If all goes well, the output should look like this.

With that, the parser is finished. We can now convert the stream of tokens from the lexer into an AST. In the next post, we can get into the juicy bits: generating and running machine-readable code.

Start Routing Notifications Today!

Courier is a notification service that centralizes all of your templates and messaging channels in one place which increases visibility and reduces engineering time.

Sign-up

More from Engineering

Simplifying notifications with the Courier iOS SDK

Push notifications are a valuable tool for keeping users informed and increasing their engagement with your app. You can use push notifications to alert users about promotions, new content, or any other important updates. While push notifications are a powerful tool, setting up push notifications in iOS can be a daunting task that requires a significant amount of effort and time. Fortunately, the Courier iOS Mobile Notifications Software Development Kit (SDK) simplifies this process.

Mike Miller

March 23, 2023

Building Android push notifications with Firebase and Courier’s SDK

Push notifications have become an essential part of modern mobile apps, allowing you to keep your users engaged and informed. However, implementing push for different platforms can be a complex and time-consuming task, requiring developers to set up and handle token management, testing, and other logistical details.

Mike Miller

March 21, 2023

Free Tools

Comparison Guides

Build your first notification in minutes

Send up to 10,000 notifications every month, for free.

Get started for free

Build your first notification in minutes

Send up to 10,000 notifications every month, for free.

Get started for free

© 2024 Courier. All rights reserved.